Related: Software engineering | CMPSC 360

Topic: Core concepts in compiler design and language theory - understanding how scanners use finite automata to tokenize source code.

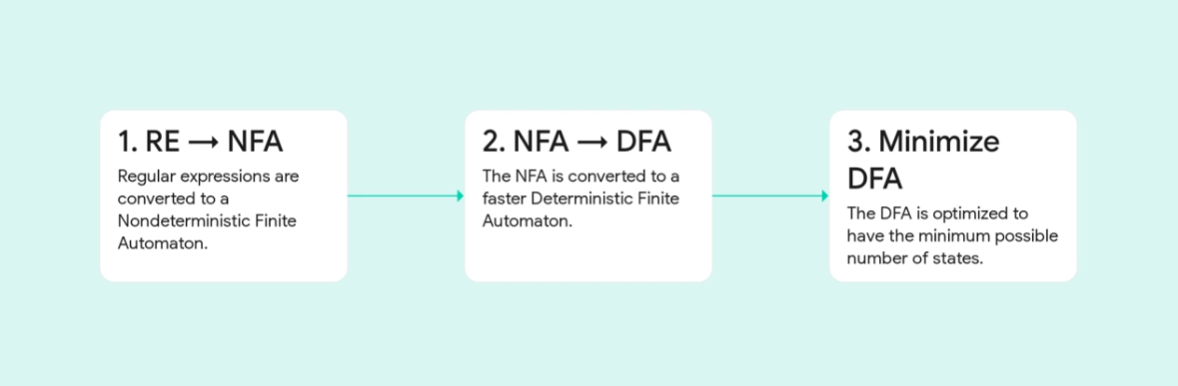

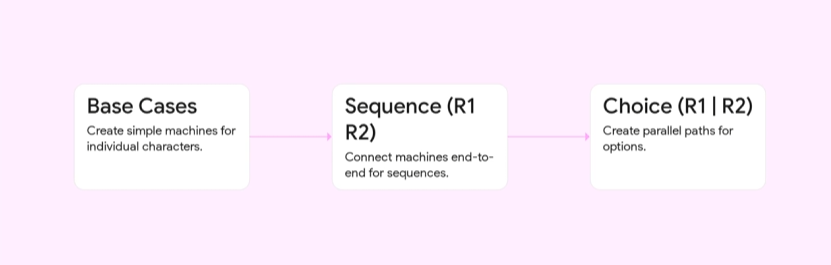

Implementing Regular Expression

Regular expressions are equivalent to finite automata

Scanners

A scanner is a lexer that converts source code into a stream of tokens. It’s just a really good rule follower (regular expression) using patterns to match tokens in the source code.

Finite Automaton

The rules the scanner follow can be converted into a conceptual machine called the finite automaton, its basically a flowchart that reads one character at a time.

NFA

Nonderministic (Multiple possible paths) Finite Automaton. Slower than a DFA

DFA

DFA Deterministic (One possible path) Finite Automaton. Faster than an NFA, but harder to construct.

Subset construction

The procedure to convert an NFA to a DFA. This involves creating states in the DFA that represent sets of states in the NFA.

direction LR

Convert

stateDiagram-v2 direction LR [*] --> A0 B1 A0 --> A1: A A1 --> B0: ε B0 --> B1: C

Practice

Hint: Build it as a combination of two simpler regex

Exactly two 0’s

Even number of 1’s

Then combine them together using the union operator.